8 Survey Design Best Practices for 2025

Craft Killer Surveys with These Best Practices

Want higher response rates and more useful data? This listicle provides survey design best practices to help you create effective surveys that deliver actionable insights. Learn how to write clear questions, optimize survey length, choose the right response scales, and implement techniques like randomization and pre-testing. Whether you're a freelancer gathering leads, a marketer boosting engagement, or a researcher collecting data, these tips will maximize your survey's impact. Applying these survey design best practices improves data quality and gives you the information you need to make better decisions.

1. Use Clear and Unbiased Question Wording

The cornerstone of any successful survey lies in the clarity and impartiality of its questions. Using clear and unbiased question wording, a core tenet of survey design best practices, is the foundation upon which reliable data is built. This practice involves meticulously crafting each question to ensure it is straightforward, avoids leading the respondent towards a particular answer, and focuses on a single, well-defined concept. By adhering to this principle, you minimize respondent confusion, maximize data accuracy, and gain valuable insights that truly reflect the opinions and experiences of your target audience. Well-worded questions reduce the ambiguity that can skew results, leading to more robust and actionable data. This is crucial for everyone from freelancers gathering leads to researchers collecting data for academic studies.

This approach rests on several key features. First and foremost is the use of simple, jargon-free language accessible to all respondents, regardless of their background or familiarity with the subject matter. Each question should address only one concept, preventing respondents from feeling pressured to answer multiple queries within a single response. Maintaining a neutral tone throughout the survey is paramount, avoiding leading phrases or emotionally charged language that could sway opinions. Consistent terminology ensures clarity and prevents misunderstandings, while clear instructions and context help respondents understand the purpose and scope of each question. This is particularly important when dealing with sensitive topics or complex issues.

The advantages of employing clear and unbiased question wording are numerous. It significantly reduces measurement error and bias, leading to more accurate responses and higher-quality data. This increased reliability allows for more robust analysis and meaningful comparisons across different groups within your target audience. Enhanced respondent understanding also contributes to higher completion rates, as participants are more likely to finish a survey that is easy to follow and comprehend. For marketing teams aiming to boost conversions, clear questions in customer satisfaction surveys can pinpoint areas for improvement. Similarly, HR professionals collecting employee feedback benefit from unbiased questions to gain accurate insights into workplace dynamics.

However, achieving this level of clarity requires diligent effort. Developing unbiased and clear questions often involves extensive testing and revision, which can be time-consuming. The pursuit of simplicity might also limit the nuanced exploration of complex topics, potentially oversimplifying multifaceted issues. Finding the right balance between clarity and depth is a crucial challenge in survey design.

Numerous successful examples illustrate the power of this best practice. Gallup's political polling meticulously uses neutral phrasing, such as "Do you approve or disapprove of the way [President] is handling his job?", to avoid influencing respondents. The widely used Net Promoter Score (NPS) utilizes the standardized question "How likely are you to recommend [company] to a friend or colleague?" to gauge customer loyalty. Census surveys, a cornerstone of demographic data collection, employ extensively tested and clear questions to ensure accurate representation of the population.

To implement clear and unbiased question wording in your own surveys, consider these practical tips:

- Avoid double-barreled questions that ask about multiple things at once.

- Pre-test with a diverse audience to identify and fix confusing language or potential biases.

- Replace industry jargon or technical terms with everyday language that everyone can understand.

- Favor active voice over passive constructions for greater clarity and directness.

By following these tips and learning from established examples, you can ensure your survey questions elicit accurate and reliable data. This meticulous approach is especially beneficial for freelancers and solopreneurs seeking scalable lead-capture tools, as well as event planners managing registrations and seeking valuable attendee feedback. Ultimately, clear and unbiased question wording empowers you to gather meaningful insights and make data-driven decisions that contribute to your success.
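If you keep your draft questions in a spreadsheet or text file, a small script can act as a first-pass screen for the wording problems described in this section. The sketch below is only a rough heuristic: the phrase list and the function name `flag_question` are hypothetical, and a flag means "have a human look at this", not "this question is wrong".

```python
import re

# Hypothetical phrase list for illustration; extend it for your own topic.
LEADING_PHRASES = ("don't you agree", "wouldn't you say", "obviously", "surely")
# "and" joining two topics is a common sign of a double-barreled question.
DOUBLE_BARREL = re.compile(r"\band\b", re.IGNORECASE)


def flag_question(text: str) -> list[str]:
    """Return heuristic wording warnings for one survey question."""
    warnings = []
    if DOUBLE_BARREL.search(text):
        warnings.append("possible double-barreled question")
    lowered = text.lower()
    for phrase in LEADING_PHRASES:
        if phrase in lowered:
            warnings.append(f"possible leading phrase: {phrase}")
    return warnings


draft_questions = [
    "How satisfied are you with our pricing and support?",
    "Don't you agree that our support team is excellent?",
    "How likely are you to recommend us to a friend or colleague?",
]
for question in draft_questions:
    print(question, "->", flag_question(question) or "no flags")
```

A keyword check like this will miss subtle bias and will sometimes flag harmless questions, so treat it as a prompt for review before pre-testing rather than a replacement for it.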

2. Optimize Survey Length and Question Order

Optimizing survey length and question order is a crucial best practice in survey design that directly impacts both the quantity and quality of the data you collect. This involves strategically managing the number of questions, thoughtfully arranging their sequence, and creating a smooth, engaging experience for respondents. By balancing the need for comprehensive data with the risk of respondent fatigue, you can maximize completion rates and minimize biases that can skew your results. This practice is essential for anyone seeking robust and reliable data, from freelancers gathering leads to researchers conducting academic studies. Effective survey design ensures that you’re getting the most accurate insights possible.

This best practice revolves around several key features. First, it emphasizes a logical progression from broad, general questions to more specific ones, creating a natural conversational flow. This "funneling" approach helps respondents ease into the survey and prevents them from feeling overwhelmed. Second, it involves strategically placing demographic questions, often towards the end unless they are essential for screening purposes. Third, sensitive questions are positioned later in the survey after a rapport has been established, increasing the likelihood of honest responses. Fourth, the use of section breaks and clear transitions helps maintain respondent engagement and provides a visual cue of progress. Finally, communicating the estimated completion time upfront manages expectations and encourages completion.

The benefits of optimizing survey length and question order are numerous. Higher completion rates are a direct result of minimizing respondent fatigue. When surveys are concise and engaging, respondents are more likely to finish them. This, in turn, leads to better data quality throughout the survey, as responses are more thoughtful and complete. Furthermore, a well-structured survey minimizes order bias effects, which occur when the placement of a question influences subsequent responses. Overall, a positive respondent experience is fostered, encouraging participation in future surveys and building trust with your audience.

While the advantages are significant, some challenges exist. Creating a concise survey may require developing multiple versions to gather comprehensive data across different segments. Complex topics might necessitate longer surveys, requiring careful balancing of length and engagement. Determining the optimal length for all audiences can also be difficult, as attention spans and motivations vary. While proper sequencing minimizes order effects, they can still occur, requiring careful analysis and interpretation of results.

Several successful implementations of this best practice demonstrate its effectiveness. SurveyMonkey, a popular online survey platform, recommends keeping surveys to 10-15 questions for maximum engagement, acknowledging the limitations of respondent attention spans. In contrast, large-scale academic longitudinal studies like the Panel Study of Income Dynamics utilize a modular design, breaking down complex surveys into smaller, manageable sections administered over time. Customer satisfaction surveys typically start with a general question about overall experience before delving into specific touchpoints, adhering to the principle of moving from broad to specific.

To effectively optimize your survey length and question order, consider these actionable tips:

- Aim for a 5-10 minute completion time for general audiences, recognizing the value of brevity.

- Use progress indicators to manage respondent expectations and provide a sense of accomplishment.

- Group related questions into logical sections with clear headings and transitions.

- Place screening questions early to filter out irrelevant respondents.

- Consider ending with open-ended questions to gather richer, qualitative insights.
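To check a draft against the 5-10 minute target before you field it, you can estimate completion time from the mix of question types. This is a minimal sketch: the seconds-per-question figures in `SECONDS_PER_TYPE` are placeholder assumptions, not benchmarks, and should be replaced with timings from your own pilot test.

```python
# Assumed average answer times in seconds; placeholders, not measured benchmarks.
SECONDS_PER_TYPE = {"single_choice": 8, "matrix_row": 6, "open_ended": 45}


def estimate_minutes(question_counts: dict[str, int]) -> float:
    """Estimate completion time in minutes from counts of each question type."""
    total_seconds = sum(
        SECONDS_PER_TYPE[question_type] * count
        for question_type, count in question_counts.items()
    )
    return total_seconds / 60


# Hypothetical draft survey: 20 single-choice items, 15 matrix rows, 3 open-ended.
draft = {"single_choice": 20, "matrix_row": 15, "open_ended": 3}
minutes = estimate_minutes(draft)
within_target = 5 <= minutes <= 10
print(f"Estimated time: {minutes:.1f} min, within 5-10 minute target: {within_target}")
```

The same estimate can feed the completion time you communicate to respondents upfront.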

This best practice has been championed by prominent figures in survey research. Jon Krosnick, a renowned survey researcher at Stanford University, has extensively studied the impact of question order and length on data quality. Roger Tourangeau, a leading expert in survey psychology, has also made significant contributions to understanding the cognitive processes involved in survey response. The Pew Research Center methodology team consistently emphasizes the importance of these principles in their rigorous survey work. By adhering to these guidelines, you can significantly enhance the effectiveness of your surveys and gather high-quality data to inform your decisions.

3. Implement Appropriate Response Scale Design

Effective survey design hinges on more than just asking the right questions; it's also about providing respondents with the right way to answer them. Implementing appropriate response scale design is a crucial best practice that significantly impacts data quality, analysis, and the overall success of your survey. This involves carefully selecting the optimal number of response options, the format of the scale, and ensuring it aligns with the specific construct you are trying to measure. By paying attention to these details, you empower respondents to provide accurate and meaningful data, ultimately leading to more robust and reliable results. This best practice deserves its place in the list because it directly influences the precision and interpretability of your survey data.

Response scale design encompasses several key features. These include:

- Balanced positive and negative options: This allows respondents to express both favorable and unfavorable sentiments, providing a more complete picture of their perspectives.

- Clear anchor points and labels: Anchors define the extremes of the scale and help respondents understand the meaning of each point. Clear labels minimize ambiguity and ensure consistent interpretation.

- Appropriate scale length (typically 5-7 points): While longer scales might seem to offer greater precision, they can overwhelm respondents and increase cognitive burden. Five to seven points generally provide sufficient discrimination without being overly complex.

- Consistent scale direction throughout the survey: Maintaining a consistent direction (e.g., positive to negative or vice versa) prevents confusion and improves response accuracy.

- Include neutral/don't know options when appropriate: A neutral midpoint lets respondents express a genuinely middling view, while a separate "don't know" option lets them signal a lack of opinion or knowledge. Either way, they are not forced to choose a response that doesn't accurately reflect their view.

Implementing appropriate response scales offers several advantages:

- Improves measurement precision: Well-designed scales allow for finer distinctions between responses, leading to more accurate data.

- Reduces response burden: Appropriate scale length and clear labeling make it easier for respondents to answer questions quickly and accurately.

- Enables statistical analysis: Standardized scales allow for various statistical analyses, facilitating data interpretation and reporting.

- Provides standardized comparison points: Consistent scales across different questions or surveys enable meaningful comparisons and trend analysis.

- Reduces acquiescence bias: Balanced scales help mitigate the tendency of respondents to agree with statements regardless of their actual opinion.

However, there are also potential drawbacks to consider:

- May force responses that don't reflect true opinions: If a respondent's true opinion falls outside the provided options, they may be forced to choose a less accurate response.

- Cultural differences in scale interpretation: The meaning assigned to different scale points can vary across cultures, requiring careful consideration of the target population.

- Potential for central tendency bias: Respondents may gravitate towards the middle of the scale, especially if they are unsure or hesitant to express strong opinions.

- Limited nuance in responses: Predefined scales may not capture the full complexity of individual opinions or experiences.

Examples of successful response scale implementation include Likert scales (Strongly Disagree to Strongly Agree) frequently used in attitude research, the Net Promoter Score's 0-10 likelihood scale for measuring customer loyalty, customer satisfaction surveys utilizing 5-point scales (Very Dissatisfied to Very Satisfied), and academic course evaluations employing 7-point effectiveness scales. These examples demonstrate the versatility and adaptability of response scales across different contexts.

To effectively implement appropriate response scales in your surveys, consider the following tips:

- Use 5-7 point scales for optimal discrimination.

- Keep scale direction consistent (positive to negative or vice versa).

- Provide verbal labels for all or key scale points.

- Consider cultural context when designing scales.

- Test scales with your target population before full deployment.

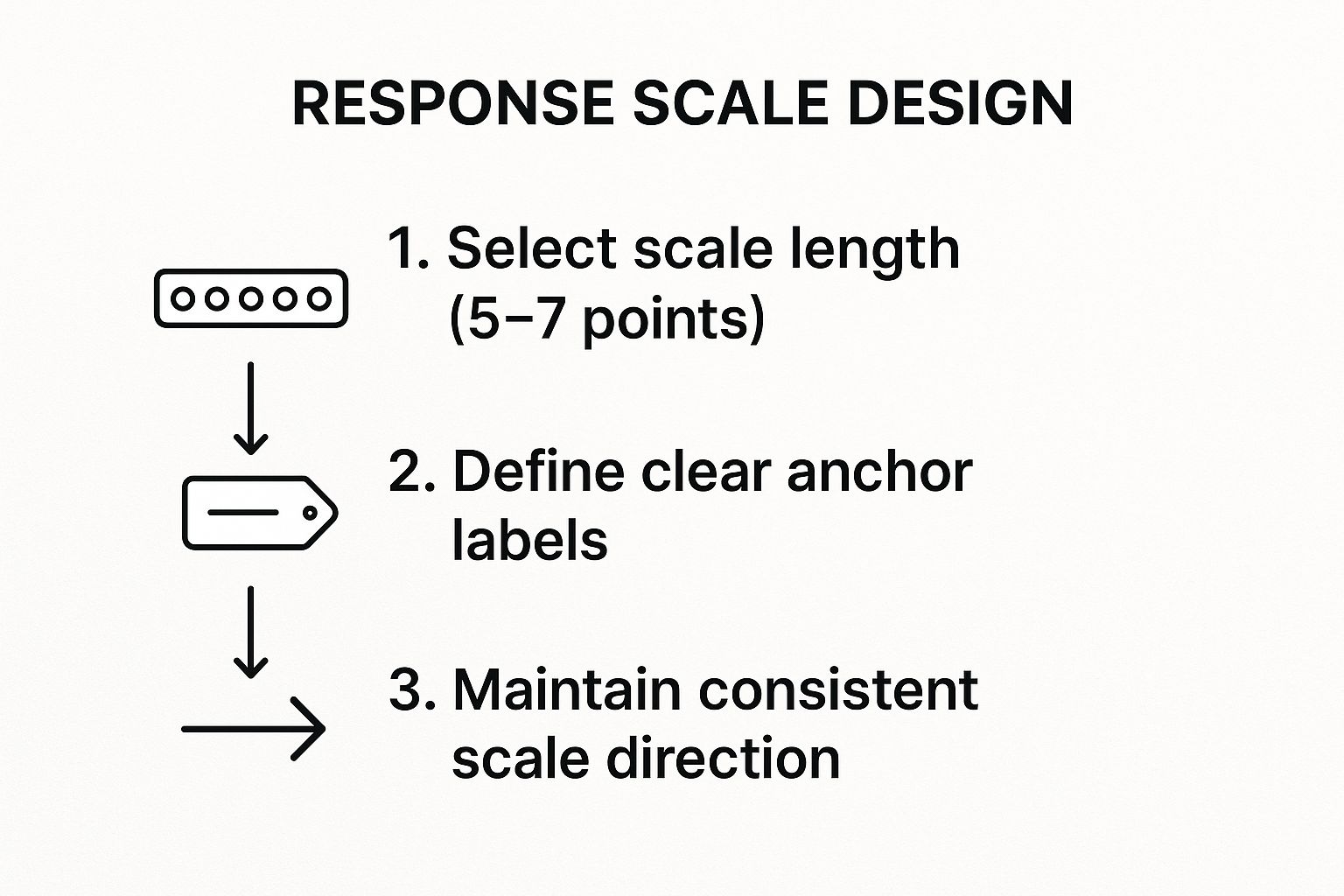

The following infographic illustrates the core steps involved in designing effective response scales:

This infographic visualizes the three essential steps in response scale design: selecting an appropriate scale length (ideally between 5 and 7 points), defining clear and concise anchor labels for each point on the scale, and maintaining a consistent scale direction throughout the entire survey.

By following these steps sequentially, as highlighted in the infographic, you can create clear and effective response scales that minimize respondent confusion and maximize data quality. This process ensures that your scales are easy to understand, consistently applied, and contribute to the overall reliability of your survey results. Implementing these best practices in response scale design will greatly enhance the value and actionability of the data you collect.
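The scale design checks described in this section (length, balance, direction, anchor labels) are easy to automate if your scales are stored as data. The sketch below assumes a hypothetical convention in which each scale point is a `(score, label)` pair, with negative scores for unfavorable options, positive scores for favorable ones, and 0 for neutral.

```python
def check_scale(points: list[tuple[int, str]]) -> list[str]:
    """Return a list of design problems found in one response scale."""
    problems = []
    scores = [score for score, _ in points]
    if not 5 <= len(points) <= 7:
        problems.append("scale length outside 5-7 points")
    negatives = sum(score < 0 for score in scores)
    positives = sum(score > 0 for score in scores)
    if negatives != positives:
        problems.append("unbalanced positive and negative options")
    if scores != sorted(scores) and scores != sorted(scores, reverse=True):
        problems.append("scale points are not in a consistent direction")
    if not points or not points[0][1].strip() or not points[-1][1].strip():
        problems.append("end points need anchor labels")
    return problems


satisfaction = [
    (-2, "Very dissatisfied"),
    (-1, "Dissatisfied"),
    (0, "Neither satisfied nor dissatisfied"),
    (1, "Satisfied"),
    (2, "Very satisfied"),
]
skewed = [(-1, "Poor"), (1, "Good"), (2, "Very good"), (3, "Excellent")]

print(check_scale(satisfaction))  # no problems expected for a balanced 5-point scale
print(check_scale(skewed))
```

A check like this catches structural slips only; whether the labels mean the same thing to your target population still has to be tested with real respondents.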

4. Use Randomization and Counterbalancing Techniques

Randomization and counterbalancing are crucial survey design best practices that help minimize bias and ensure the validity of your findings. They represent a set of techniques used to control the potential influence of question order, response option order, and other systematic biases that can inadvertently skew your data. Implementing these techniques strengthens the integrity of your survey, leading to more reliable and actionable insights. Whether you're a freelancer gathering leads, a marketer assessing campaign effectiveness, or an academic researcher collecting data, understanding and applying these techniques is essential for obtaining accurate and unbiased results.

This approach revolves around the core principle of randomly varying the presentation of survey elements to different respondents. This might involve shuffling the order of response options for multiple-choice questions, rotating the sequence of questions within the survey, or even creating different versions of the survey with varied question orders. By doing so, you mitigate the risk of "order effects," which occur when the position of a question or response option influences how participants answer.

How Randomization and Counterbalancing Work:

Several methods contribute to effective randomization and counterbalancing in survey design:

- Random Rotation of Response Options: For questions with multiple choices, the order in which options are presented can influence selection. Randomizing the order for each respondent ensures that no single option benefits from its position.

- Multiple Survey Versions with Different Question Orders: Creating multiple versions of your survey, each with a different question order, helps control for the impact of preceding questions on subsequent responses. This is especially important when dealing with sensitive or potentially leading questions.

- Balanced Presentation of Positive/Negative Items: If your survey includes items measuring opposite constructs (e.g., satisfaction vs. dissatisfaction), balancing their presentation order helps prevent response bias. For instance, a block of negative items followed by a positive item might lead to a more negative overall perception.

- Randomized Assignment of Respondents to Versions: When using multiple survey versions, it's crucial to randomly assign participants to each version. This ensures an even distribution of potential biases across the sample.
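Most survey platforms offer these features as settings, but the logic is simple enough to sketch. The example below assumes hypothetical respondent and question IDs and uses Python's standard `random` module. Seeding each shuffle from the IDs keeps the order reproducible, so you can reconstruct later what each respondent saw.

```python
import random


def shuffled_options(options, respondent_id, question_id, pinned_last=()):
    """Return the options in a random order that is reproducible per respondent.

    Options listed in pinned_last (for example "Other") stay at the end.
    """
    rng = random.Random(f"{respondent_id}:{question_id}")
    movable = [option for option in options if option not in pinned_last]
    rng.shuffle(movable)
    return movable + [option for option in options if option in pinned_last]


def assign_version(respondent_id, versions):
    """Randomly assign a respondent to one survey version, reproducibly."""
    rng = random.Random(f"version:{respondent_id}")
    return rng.choice(versions)


options = ["Price", "Quality", "Support", "Other"]
order = shuffled_options(options, "r-001", "q3", pinned_last=("Other",))
version = assign_version("r-001", ["A", "B"])
print(order, version)
```

Pinning catch-all options such as "Other" or "None of the above" to the end is a design choice made here for readability; the randomization applies only to the substantive options.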

Examples of Successful Implementation:

The benefits of randomization and counterbalancing are evident across various fields:

- Political Polls: Candidate names are often randomized on ballots and in survey questions to avoid "ballot order effects," where candidates listed first may have an advantage.

- Market Research: Rotating product features in surveys helps isolate consumer preferences and determine which features truly drive purchase decisions.

- Academic Research: Researchers frequently employ Latin square designs, a form of counterbalancing, to control for order effects in complex experiments involving multiple conditions.

- A/B Testing in Online Surveys: Different question presentations can be tested simultaneously using A/B testing to identify the most effective phrasing and formatting for maximizing response rates and data quality.
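To make the Latin square idea concrete, here is a minimal sketch that builds a cyclic Latin square for a set of conditions (the condition names are hypothetical). Each row is one presentation order, and every condition appears exactly once in every position across the rows.

```python
def latin_square(conditions):
    """Build a cyclic Latin square: row i is the condition list rotated by i."""
    n = len(conditions)
    return [
        [conditions[(row + col) % n] for col in range(n)]
        for row in range(n)
    ]


square = latin_square(["Feature A", "Feature B", "Feature C", "Feature D"])
for row in square:
    print(row)

# Respondent i (0-based) would see the order in row i % len(square).
```

A cyclic square balances position but not which condition immediately precedes which; if carryover between adjacent items is a concern, a more elaborate counterbalanced design is needed.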

Actionable Tips for Implementing Randomization and Counterbalancing:

- Randomize Response Options: Use software features or programming scripts to randomize response options for multiple-choice questions automatically.

- Use Systematic Rotation for Matrix Questions: For matrix-style questions, rotate the order of rows or columns systematically to counterbalance potential position effects.

- Consider Split-Ballot Experiments: When exploring controversial or sensitive topics, split-ballot experiments, where different versions of a question are presented to different subsets of respondents, can be highly effective.

- Document Randomization Procedures: Keep detailed records of your randomization procedures to ensure transparency and facilitate data analysis.

- Test Randomization Functionality: Thoroughly test your survey before launching to ensure that randomization features are working correctly.
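One way to test randomization before launch is to run a batch of test responses, export the order each one saw, and tally how often every option landed in every position. The sketch below simulates those exports with Python's `random` module as a stand-in; the function names and the 10% tolerance are assumptions for illustration.

```python
import random
from collections import Counter, defaultdict


def position_counts(observed_orders):
    """Count how often each option appeared in each position."""
    counts = defaultdict(Counter)
    for order in observed_orders:
        for position, option in enumerate(order):
            counts[option][position] += 1
    return counts


def looks_uniform(counts, n_orders, tolerance=0.10):
    """True if every option/position count is within tolerance of the expected share."""
    n_positions = len(counts)
    expected = n_orders / n_positions
    return all(
        abs(counts[option][position] - expected) <= tolerance * expected
        for option in list(counts)
        for position in range(n_positions)
    )


options = ["A", "B", "C"]
rng = random.Random(2025)  # fixed seed so the simulation is repeatable
simulated = [rng.sample(options, k=len(options)) for _ in range(6000)]
healthy = looks_uniform(position_counts(simulated), len(simulated))

# A survey whose randomization setting was never switched on shows a fixed order.
broken = looks_uniform(position_counts([options] * 6000), 6000)
print(healthy, broken)
```

Saving the tallies alongside the survey files also gives you a record of the randomization procedure for later analysis.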

Pros and Cons:

Pros:

- Reduces systematic bias from question/option order by spreading order effects evenly across respondents

- Improves the validity of results

- Enables fair testing of all options

- Helps reveal acquiescence bias (tendency to agree with all statements) when positively and negatively worded items are balanced

- Strengthens causal inferences

Cons:

- Increases survey complexity and cost

- May require larger sample sizes for analysis

- Can complicate data analysis procedures

- Not necessary for all survey types (e.g., simple demographic surveys)

When to Use Randomization and Counterbalancing:

While not essential for all surveys, randomization and counterbalancing become increasingly important as the complexity and sensitivity of your research increase. They are particularly valuable when:

- Investigating potentially sensitive or controversial topics

- Measuring attitudes, opinions, and preferences

- Comparing different products, services, or interventions

- Conducting experimental or quasi-experimental research

By incorporating these survey design best practices, you enhance the credibility of your data and contribute to more robust and meaningful conclusions. Pioneers like Donald Campbell and Thomas Cook have significantly shaped our understanding of experimental design and the importance of controlling for bias, paving the way for more rigorous and reliable research methodologies. Their work underscores the value of randomization and counterbalancing in achieving valid and trustworthy survey results.

5. Design Mobile-Friendly and Accessible Surveys

In today's digitally driven world, mobile devices have become the primary means of accessing information and interacting online. This shift in user behavior necessitates a mobile-first approach to survey design. Designing mobile-friendly and accessible surveys is no longer a bonus but a crucial best practice for maximizing reach, ensuring inclusivity, and gathering high-quality data. This approach involves creating surveys that function flawlessly across a wide range of devices, cater to individuals with varying abilities, and adhere to web accessibility guidelines. Implementing this strategy is essential for gathering representative data and ensuring equal opportunities for participation. Ignoring mobile optimization and accessibility risks alienating a substantial portion of your target audience and compromising the validity of your research.

Mobile-friendly design, in particular, has become paramount as smartphone usage continues to rise. Many individuals now complete surveys exclusively on their mobile devices, making it crucial that your surveys render correctly and provide a seamless user experience on smaller screens. Accessibility, on the other hand, addresses the needs of users with disabilities, ensuring that everyone, regardless of their abilities, can participate in your surveys. This includes individuals using screen readers, keyboard navigation, or those requiring high contrast and larger fonts.

Features of a well-designed mobile-friendly and accessible survey include responsive design that adapts to different screen sizes, touch-friendly interface elements for easy interaction on touchscreens, screen reader compatibility for users with visual impairments, high contrast options and readable fonts for enhanced visibility, and simplified navigation designed for smaller screens. For example, long, scrolling questions should be avoided in favor of concise, easily digestible formats. Radio buttons and checkboxes should be large enough to tap accurately on a touchscreen.

The benefits of embracing this approach are numerous. Reaching a broader and more diverse audience is key. By catering to both mobile users and individuals with disabilities, you significantly expand your potential respondent pool and gather more representative data. This inclusivity also leads to higher response rates from mobile users, who are more likely to complete a survey that is easy to access and navigate on their preferred device. Furthermore, adopting accessible design practices helps ensure compliance with accessibility regulations, mitigating legal risks and demonstrating a commitment to inclusivity. Finally, mobile-first and accessible design future-proofs your survey design, ensuring it remains relevant and effective as technology evolves.

However, implementing these best practices does come with some challenges. Developing mobile-friendly and accessible surveys can require additional development time and cost compared to traditional desktop-focused surveys. It may also limit the use of certain complex question types that are difficult to render or interact with on mobile devices. Thorough cross-platform testing is essential to ensure consistent functionality and user experience across various devices and operating systems. Finally, older devices with limited processing power or outdated software may present technical challenges.

Numerous organizations have successfully implemented mobile-first and accessible survey design. Qualtrics, a leading survey platform, offers mobile-optimized survey templates to streamline the design process. The US Census Bureau adopted a mobile-first approach for the 2020 Census, recognizing the increasing reliance on mobile devices. The Pew Research Center also utilizes mobile-responsive survey design for its research. In the academic sphere, many institutions are leveraging accessible survey platforms to gather student feedback.

To effectively design mobile-friendly and accessible surveys, follow these actionable tips: test your surveys on multiple devices and browsers to ensure compatibility and identify potential issues; use large, touch-friendly buttons and response areas for easy interaction on touchscreens; implement proper heading structure for screen readers to navigate the content effectively; provide alternative text for images and graphics so users with visual impairments can understand their context; and ensure sufficient color contrast for visibility, particularly for users with low vision.
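Color contrast is one of the few accessibility tips that can be checked with arithmetic. The sketch below computes the contrast ratio defined in WCAG 2.x from two RGB colors. The function names are my own; the 4.5:1 threshold is the WCAG Level AA minimum for normal-size text.

```python
def _linear(channel: int) -> float:
    """Convert an 8-bit sRGB channel to its linear value (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    r, g, b = (_linear(value) for value in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground, background) -> float:
    """Contrast ratio between two colors, from 1 (identical) to 21 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


AA_NORMAL_TEXT = 4.5  # WCAG Level AA minimum for normal-size text

black_on_white = contrast_ratio((0, 0, 0), (255, 255, 255))
light_gray_on_white = contrast_ratio((200, 200, 200), (255, 255, 255))
print(black_on_white >= AA_NORMAL_TEXT, light_gray_on_white >= AA_NORMAL_TEXT)
```

Running your survey theme's text and background colors through a check like this before launch catches low-contrast question text and button labels early.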

The importance of mobile-friendly and accessible survey design has been championed by various organizations and individuals. The World Wide Web Consortium (W3C) publishes the Web Content Accessibility Guidelines (WCAG), a comprehensive standard for web accessibility that can be applied to survey design. Mobile-first design advocates like Luke Wroblewski have highlighted the importance of prioritizing mobile users. Survey platform companies like Qualtrics and SurveyMonkey have also played a key role in popularizing these best practices by offering mobile-optimized features and resources. By adopting these practices, you can ensure your surveys are inclusive, effective, and reach the widest possible audience, ultimately leading to more valuable and representative data.

6. Conduct Thorough Pre-testing and Pilot Studies

Pre-testing and pilot studies are indispensable steps in survey design best practices, acting as a critical quality assurance check before launching your survey to the wider target population. This process involves administering your survey to a smaller, representative group and gathering feedback to identify and rectify any potential issues. By investing time in these preliminary stages, you can prevent costly errors, improve data quality, and ensure a smoother experience for your respondents. This practice is especially crucial for freelancers, solopreneurs, marketing teams, researchers, and anyone seeking reliable data from their surveys.

Pre-testing and pilot studies essentially involve a dress rehearsal of your survey. This allows you to identify confusing questions, technical glitches, and any other problems that could compromise the integrity of your data. The process typically involves several stages:

- Cognitive Interviewing: This technique involves asking respondents to verbalize their thought processes as they answer survey questions. This helps uncover any misinterpretations, ambiguities, or difficulties in understanding the questions. It provides invaluable insights into how your target audience interprets the language and framing of your survey.

- Small-Scale Pilot Testing: After refining the survey based on cognitive interviews, a small-scale pilot test is conducted with a group representative of your target audience. This simulates the actual survey administration and allows you to assess the survey's flow, identify any remaining problematic questions, and evaluate completion rates.

- Technical Testing: In today's digital landscape, surveys are frequently administered online or through mobile devices. Technical testing ensures your survey functions correctly across different platforms, browsers, and devices. This includes checking for compatibility issues, ensuring proper display formatting, and validating any interactive elements.

- Analysis of Completion Rates and Response Patterns: Analyzing data from the pilot study provides valuable insights into respondent behavior. Low completion rates can indicate issues with survey length, confusing questions, or technical difficulties. Examining response patterns can reveal inconsistencies or unexpected results that might point to underlying problems with question wording or survey structure.

- Iterative Refinement Based on Feedback: The feedback gathered through all these stages is used to iteratively refine the survey. This may involve revising question wording, clarifying instructions, adjusting the survey flow, or addressing technical issues. This iterative process ensures the final survey is clear, engaging, and effectively captures the desired information.
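The completion-rate analysis described in the stages above can start very simply. This sketch assumes you can export, for each pilot respondent, the number of the last question they answered; the pilot data shown is made up for illustration.

```python
from collections import Counter


def pilot_summary(last_answered, n_questions):
    """Return the completion rate and the drop-off rate after each question.

    last_answered holds, per respondent, the 1-based number of the last
    question they answered. Drop-off after question q is the share of
    respondents who answered q and then stopped.
    """
    stops = Counter(last_answered)
    completion_rate = stops[n_questions] / len(last_answered)
    dropoff = {}
    for q in range(1, n_questions):
        answered = sum(1 for last in last_answered if last >= q)
        dropoff[q] = stops[q] / answered if answered else 0.0
    return completion_rate, dropoff


# Hypothetical pilot of 10 respondents on a 5-question survey.
pilot = [5, 5, 5, 3, 3, 3, 5, 2, 5, 5]
completion_rate, dropoff = pilot_summary(pilot, n_questions=5)
worst_question = max(dropoff, key=dropoff.get)
print(f"Completion rate: {completion_rate:.0%}, highest drop-off after question {worst_question}")
```

A spike in drop-off after one question is a cue to revisit that question and whatever follows it in cognitive interviews, since the numbers show where people leave but not why.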

The benefits of pre-testing and pilot studies are numerous. They identify confusing or problematic questions early on, saving you from having to re-field the survey or deal with unusable data. They also improve the survey flow and user experience, leading to higher completion rates and better data quality. By addressing potential issues in advance, pre-testing reduces non-response and measurement error, ultimately saving you time and resources in the long run.

However, pre-testing also has some drawbacks. It requires additional time and resources upfront, which can be a constraint for projects with tight deadlines or limited budgets. Furthermore, the feedback received may necessitate significant survey changes, potentially requiring a complete overhaul of certain sections. It's also important to remember that pilot participants should generally be excluded from the main study, since their earlier exposure to the questions can influence their answers. Lastly, while pre-testing helps identify many potential issues, it may not capture every single problem that could arise during the full deployment.

Successful implementation of pre-testing and pilot studies can be seen across various sectors. The US Census Bureau conducts extensive field testing before its decennial surveys to ensure accurate and reliable data collection. Gallup uses cognitive interviewing for new question development, ensuring their polls accurately reflect public opinion. Academic researchers regularly conduct pilot studies before major data collection efforts to validate their research instruments. Market research firms also utilize focus groups and pilot tests to refine their surveys and ensure they effectively capture consumer insights.

For those implementing pre-testing and pilot studies, here are some actionable tips: recruit pilot participants who closely resemble your target population; use think-aloud protocols during cognitive interviews to gain deeper insights into respondent thinking; test both the content and technical functionality of your survey; systematically document and analyze all feedback received; and plan multiple rounds of testing, especially for complex surveys. By following these best practices, you can maximize the benefits of pre-testing and ensure your survey is robust, reliable, and yields high-quality data. This approach is particularly valuable for freelancers, solopreneurs, and smaller teams seeking scalable lead capture tools or conducting market research, as it ensures efficient use of limited resources while maximizing data accuracy.

7. Implement Strategic Sampling and Recruitment Methods

Effective survey design hinges not only on crafting insightful questions but also on reaching the right respondents. This is where strategic sampling and recruitment methods come into play, representing a crucial best practice for any survey project. Implementing these methods ensures that your collected data is representative of your target population, allowing you to draw valid conclusions and make informed decisions. This isn't simply about sending out as many surveys as possible; it's about reaching the right people in a systematic way.

Strategic sampling and recruitment encompass a range of procedures designed to identify and recruit a participant group that accurately reflects the characteristics of the larger population you're studying. This careful selection process allows you to generalize your findings with confidence, knowing they hold true beyond your sample group. This approach is vital for maximizing the value of your survey data and ensuring the robustness of your conclusions.

Key Features of Effective Sampling and Recruitment:

- Clearly Defined Target Population: Before embarking on your sampling journey, you must precisely define the population you wish to study. This clarity is fundamental for selecting the right sampling method and ensuring relevant data collection. Are you targeting small business owners, stay-at-home parents, or a specific demographic within a larger market? Defining this scope is paramount.

- Appropriate Sampling Method Selection: Various sampling methods exist, each with its strengths and weaknesses. Probability sampling methods, such as simple random sampling, stratified sampling, and cluster sampling, offer strong generalizability when executed correctly. Non-probability methods, like convenience sampling or snowball sampling, may be more practical in certain situations but limit the generalizability of your findings. Choosing the right method depends heavily on your research goals and resources.

- Statistical Power Calculations for Sample Size: Determining the appropriate sample size is crucial for statistical validity. Too small a sample produces wide margins of error and may miss real effects, while an excessively large sample wastes resources. Statistical power calculations identify the minimum sample size needed to detect an effect of a given size within your population; for purely descriptive surveys, the equivalent calculation starts from the margin of error you are willing to accept.

- Multiple Recruitment Channels and Methods: To reach your target population effectively, utilize a diverse range of recruitment channels. This could include email invitations, social media outreach, online panels, phone calls, or even in-person recruitment depending on your target group. Diversifying your methods increases the likelihood of reaching a representative sample and minimizing selection bias.

- Tracking and Management of Response Rates: Monitor your response rates closely throughout the survey process. Low response rates can introduce non-response bias when the people who answer differ from those who do not, which limits the generalizability of your findings. Implement strategies to improve response rates, such as sending reminders, offering incentives, or simplifying the survey design.
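The sample size and response rate points above can be made concrete with a short calculation. The sketch below is illustrative only: it uses the standard margin-of-error formula for a proportion (with an optional finite population correction) rather than a full power analysis, and the function names `required_sample_size` and `invitations_needed` are hypothetical helpers, not part of any survey tool.

```python
import math

# Two-sided z-scores for common confidence levels.
Z_SCORES = {0.90: 1.645, 0.95: 1.96, 0.99: 2.576}


def required_sample_size(margin_of_error, confidence=0.95,
                         proportion=0.5, population=None):
    """Completed responses needed to estimate a proportion
    within +/- margin_of_error at the given confidence level.

    proportion=0.5 is the most conservative assumption (largest sample).
    """
    z = Z_SCORES[confidence]
    n = (z ** 2) * proportion * (1 - proportion) / margin_of_error ** 2
    if population is not None:
        # Finite population correction for small target populations.
        n = n / (1 + (n - 1) / population)
    return math.ceil(n)


def invitations_needed(completes, expected_response_rate):
    """Invitations to send so that the expected number of completes
    is reached, given an assumed response rate between 0 and 1."""
    return math.ceil(completes / expected_response_rate)


if __name__ == "__main__":
    n = required_sample_size(0.05)               # large population
    n_small = required_sample_size(0.05, population=2000)
    print(n, n_small, invitations_needed(n, 0.25))
```

The expected response rate is an assumption you supply, ideally from a pilot or a previous survey with a similar audience; if the real rate turns out lower, the achieved margin of error will be wider than planned.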

Henri Den offers resources on reaching the right audience that can provide useful context for planning your survey outreach. Understanding your audience deeply is a cornerstone of effective survey design, and the sampling and recruitment methods you choose can significantly affect your results.

Pros and Cons of Strategic Sampling and Recruitment:

Pros:

- Enables generalization to the broader population

- Provides a statistical basis for conclusions

- Improves the representativeness of results

- Allows for subgroup analysis

- Supports the validity of findings

Cons:

- Can be expensive and time-consuming

- May be difficult to reach certain populations

- Requires expertise in sampling methodology

- Non-response bias can still occur

Examples of Successful Implementation:

- Gallup's probability sampling for political polls

- Pew Research Center's dual-frame telephone sampling

- Academic longitudinal studies with stratified sampling

- Market research panels with quota sampling methods

Tips for Implementing Strategic Sampling and Recruitment:

- Define your target population clearly before sampling.

- Use probability sampling when generalization is important.

- Calculate sample size based on the desired precision.

- Plan for non-response with oversample strategies.

- Document sampling procedures for transparency.
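As one way to apply the tips above, here is a minimal sketch of proportional stratified sampling using only Python's standard library. The sampling frame is assumed to be a list of dictionaries, and the function name `stratified_sample` and the `segment` field are hypothetical. Passing a fixed `seed` makes the draw repeatable, which helps when documenting sampling procedures.

```python
import random
from collections import defaultdict


def stratified_sample(frame, stratum_key, total_n, seed=None):
    """Draw a proportionally allocated stratified random sample.

    frame       : list of dicts, one per unit in the sampling frame
    stratum_key : field that defines the strata (e.g. "segment")
    total_n     : target overall sample size
    seed        : optional seed so the draw can be reproduced
    """
    rng = random.Random(seed)

    # Group the frame by stratum.
    strata = defaultdict(list)
    for unit in frame:
        strata[unit[stratum_key]].append(unit)

    sample = []
    for units in strata.values():
        # Proportional allocation: each stratum gets its population share,
        # with at least one unit and never more than the stratum contains.
        share = round(total_n * len(units) / len(frame))
        k = min(len(units), max(1, share))
        sample.extend(rng.sample(units, k))
    return sample


if __name__ == "__main__":
    frame = (
        [{"id": i, "segment": "small"} for i in range(60)]
        + [{"id": i, "segment": "medium"} for i in range(60, 90)]
        + [{"id": i, "segment": "large"} for i in range(90, 100)]
    )
    picked = stratified_sample(frame, "segment", total_n=20, seed=42)
    print(len(picked))
```

Because each stratum's allocation is rounded separately, the final sample can differ from `total_n` by a unit or two when the shares are not whole numbers. To plan for non-response, increase `total_n` before drawing rather than topping up afterwards.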

By meticulously implementing these survey design best practices, you ensure the quality and reliability of your survey data, allowing you to derive meaningful insights and make informed decisions based on representative findings. Strategic sampling and recruitment is not just a statistical exercise; it's a crucial step in ensuring your survey delivers actionable results.

8. Plan Comprehensive Data Management and Analysis Strategy

A robust survey isn't just about asking the right questions; it's about knowing what you'll do with the answers. Among survey design best practices, planning a comprehensive data management and analysis strategy stands out as crucial, albeit often overlooked. This critical step, implemented from the project's inception, encompasses data collection, storage, cleaning, analysis, and even eventual archiving. Without a solid strategy, you risk encountering roadblocks during analysis, potentially rendering your collected data useless for answering your research questions. This foresight ensures that your efforts translate into actionable insights, ultimately maximizing the value of your survey.

This approach involves several key components: establishing data quality checks throughout the process, developing an analysis plan directly aligned with your research objectives, and ensuring compliance with relevant privacy regulations. Think of it as building a blueprint for your data's journey, from the moment a respondent clicks "submit" to the final report.

A pre-planned data management strategy incorporates several essential features:

- Pre-planned Data Cleaning and Validation Procedures: Define clear procedures to handle missing data, outliers, and inconsistencies before you even launch your survey. This proactive approach saves you significant time and frustration later.

- Analysis Plan Aligned with Research Objectives: Your analysis shouldn't be an afterthought. Decide which statistical methods you'll use and how you'll interpret the results before collecting any data. This ensures your analysis directly addresses your research questions.

- Data Security and Privacy Compliance Measures: In today's data-sensitive environment, protecting respondent data is paramount. Implement appropriate security measures and ensure your survey complies with regulations like GDPR and CCPA.

- Quality Control Checkpoints Throughout Collection: Don't wait until the end to check your data. Implement regular quality checks during the collection phase to identify and address any potential issues early on.

- Documentation of All Data Processing Steps: Maintain a detailed record of every step in your data processing pipeline. This ensures transparency, facilitates reproducibility, and allows for easy troubleshooting.
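A quality control checkpoint during collection can be as simple as a function that flags suspicious responses as they arrive. The sketch below is an assumption about what such a check might look like: the two checks shown (very fast completion and identical answers across a rating grid) are common examples rather than anything prescribed here, and the field names and the 60-second threshold are hypothetical.

```python
def flag_response(response, min_seconds=60, grid_fields=()):
    """Return a list of quality flags for one survey response.

    response    : dict of field name -> answer
    min_seconds : completion times below this are flagged as "speeder"
    grid_fields : rating-grid fields checked for identical answers
    """
    flags = []

    # Check 1: completed implausibly fast (only if a duration was recorded).
    duration = response.get("duration_seconds")
    if duration is not None and duration < min_seconds:
        flags.append("speeder")

    # Check 2: same answer to every item in a grid of three or more questions.
    grid_answers = [response.get(field) for field in grid_fields]
    if (len(grid_answers) >= 3
            and None not in grid_answers
            and len(set(grid_answers)) == 1):
        flags.append("straight_liner")

    return flags


if __name__ == "__main__":
    grid = ("q1", "q2", "q3")
    fast = {"duration_seconds": 25, "q1": 3, "q2": 3, "q3": 3}
    print(flag_response(fast, grid_fields=grid))
```

Flagging rather than deleting keeps the decision reviewable: flagged responses can be inspected and the final inclusion rule recorded alongside the other processing steps.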

Implementing a comprehensive data strategy offers numerous benefits:

- Ensures Data Quality and Integrity: By proactively addressing potential data issues, you maintain the accuracy and reliability of your results.

- Enables Efficient Analysis and Reporting: A well-defined plan streamlines the analysis process, allowing you to generate insights more quickly.

- Reduces Errors in Data Interpretation: A clear analysis plan minimizes the risk of misinterpreting your findings.

- Supports Reproducible Research: Detailed documentation enables others to replicate your analysis, enhancing the credibility of your research.

- Facilitates Compliance with Regulations: A proactive approach to data security and privacy simplifies compliance with relevant regulations.

However, this approach also has some drawbacks:

- Requires Significant Upfront Planning: Developing a comprehensive strategy takes time and effort before you even launch your survey.

- May Limit Exploratory Analysis Opportunities: While a pre-defined plan is essential, it can sometimes restrict flexibility in exploring unexpected findings.

- Needs Expertise in Data Management: Implementing this approach effectively may require specific skills in data management and analysis.

- Can Be Resource-Intensive to Implement Properly: Depending on the complexity of your survey, implementing a comprehensive strategy may require significant resources.

Examples of successful implementation include: clinical research data management systems with built-in validation rules, government survey programs with standardized processing protocols, academic research using pre-registered analysis plans, and market research with automated data quality monitoring. These examples demonstrate the value of a well-defined data strategy across diverse fields.

For freelancers and solopreneurs, marketing teams, event planners, HR professionals, researchers, and educators alike, planning your data analysis strategy upfront is a critical part of good survey design. Here are some actionable tips to get you started:

- Create a Data Dictionary Before Survey Launch: Define each variable and its possible values to ensure clarity and consistency.

- Establish Validation Rules for Data Entry: Implement rules to prevent invalid responses and ensure data integrity.

- Plan Your Analysis Strategy During the Survey Design Phase: Determine your analytical approach before you collect any data.

- Implement Regular Data Quality Monitoring: Check your data regularly for errors and inconsistencies.

- Document All Data Cleaning and Processing Decisions: Maintain a detailed record of all data manipulation steps.
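The first, second, and last tips above fit together naturally: a data dictionary can drive the validation rules, and each exclusion can be logged as it happens. The following is a minimal sketch under assumed field names (`age`, `satisfaction`, `comments`); the dictionary layout and the functions `validate` and `clean_records` are hypothetical, not a standard format.

```python
# Data dictionary: one entry per variable, defined before launch.
DATA_DICTIONARY = {
    "age": {"type": int, "min": 18, "max": 120, "required": True},
    "satisfaction": {"type": int, "allowed": {1, 2, 3, 4, 5}, "required": True},
    "comments": {"type": str, "required": False},
}


def validate(record, dictionary=DATA_DICTIONARY):
    """Return a list of problems found in one record (empty if valid)."""
    problems = []
    for field, rule in dictionary.items():
        value = record.get(field)
        if value is None or value == "":
            if rule.get("required"):
                problems.append(f"{field}: missing required value")
            continue
        if not isinstance(value, rule["type"]):
            problems.append(f"{field}: expected {rule['type'].__name__}")
            continue
        if "allowed" in rule and value not in rule["allowed"]:
            problems.append(f"{field}: {value!r} not in allowed values")
        if "min" in rule and value < rule["min"]:
            problems.append(f"{field}: {value} below minimum {rule['min']}")
        if "max" in rule and value > rule["max"]:
            problems.append(f"{field}: {value} above maximum {rule['max']}")
    return problems


def clean_records(records):
    """Split records into kept rows and a log of every exclusion."""
    kept, log = [], []
    for row, record in enumerate(records):
        problems = validate(record)
        if problems:
            log.append({"row": row, "action": "excluded", "reasons": problems})
        else:
            kept.append(record)
    return kept, log


if __name__ == "__main__":
    rows = [
        {"age": 34, "satisfaction": 4, "comments": "ok"},
        {"age": 17, "satisfaction": 6},
    ]
    kept, log = clean_records(rows)
    print(len(kept), log)
```

Excluding every invalid row is only one possible policy; whichever rule you choose for missing or out-of-range values, writing it into the log keeps the cleaning decisions documented and reproducible.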

By incorporating these best practices, you can ensure your survey yields reliable, actionable insights that inform decision-making and drive success. A well-planned data management and analysis strategy isn't just a best practice; it's an investment in the value and integrity of your research.

8 Best Practices Comparison

| Best Practice | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |
|---|---|---|---|---|---|
| Use Clear and Unbiased Question Wording | Medium – requires testing & revision | Moderate – time for careful crafting | High – improves data quality and reliability | Surveys needing precise, unbiased data | Reduces bias; enhances respondent understanding |
| Optimize Survey Length and Question Order | Medium – balancing length & flow | Moderate – multiple versions/testing | High – better response rates & quality | Surveys prone to fatigue or drop-off | Reduces fatigue; improves completion rates |
| Implement Appropriate Response Scale Design | Low to Medium – design of scales | Low – scale selection & labeling | High – better measurement precision | Attitude, satisfaction, and rating surveys | Standardizes comparisons; reduces bias |
| Use Randomization and Counterbalancing Techniques | High – complex setup & multiple versions | High – larger samples & analysis effort | High – reduces order bias; improves validity | Complex or experimental surveys | Eliminates systematic biases; strengthens causal inferences |
| Design Mobile-Friendly and Accessible Surveys | Medium – technical design & testing | Moderate to High – development/testing | High – broader reach & compliance | Surveys targeting diverse/mobile users | Increases accessibility; future-proofs surveys |
| Conduct Thorough Pre-testing and Pilot Studies | Medium to High – iterative testing phase | Moderate – time and participant recruitment | High – identifies issues early; improves flow | All surveys requiring high data quality | Reduces errors; validates questions and tech |
| Implement Strategic Sampling and Recruitment Methods | High – statistical expertise & planning | High – recruitment efforts and costs | High – generalizable and representative data | Surveys needing population representation | Improves representativeness; supports validity |
| Plan Comprehensive Data Management and Analysis Strategy | High – upfront planning & expertise | High – tools, protocols, and personnel | High – ensures data integrity & efficient analysis | All surveys with complex data needs | Ensures data quality; supports reproducibility |

Ready to Create Your Best Survey Yet?

By now, you should have a solid grasp of survey design best practices. From crafting clear and unbiased questions to optimizing survey length, implementing appropriate response scales, and employing randomization techniques, each step plays a vital role in gathering reliable and actionable data. Remember, mobile-friendly design, accessibility, pre-testing, strategic sampling, and a planned data management and analysis strategy are also critical components of effective survey design. Mastering these practices empowers you to collect high-quality data that can inform critical decisions, improve customer satisfaction, optimize marketing campaigns, streamline HR processes, and drive meaningful research outcomes. Whether you're a freelancer seeking leads, a marketer boosting conversions, an event planner managing registrations, or a researcher collecting data, these practices are essential for success.

Putting these practices to work can significantly improve what you learn from your target audience. By focusing on these core principles, you'll be well-equipped to create surveys that not only yield higher response rates but also deliver data you can trust.

Ready to put these survey design best practices into action and streamline your entire survey process? BuildForm offers AI-powered features designed to help you optimize every step, from design and analysis to data management. Explore the power of AI-driven survey creation and start building impactful surveys today!